Better Quality Through a Test-First Approach

Connected Spaces

Project Overview

Cruise ships are responsible for continuously monitoring everyone aboard (“souls on board”), including guests, crew, and contingent staff. The system consisted of passenger devices as well as a distributed on-shore system that enabled real-time monitoring.

The system also monitored and facilitated activities such as safety drills, real-time transitions when problems arose during a cruise, and tracking of temporary and permanent boarding and disembarkation.

Problem Statement

In a complex distributed system with many diverse incoming feature requests, delivery and quality schedules are hard to meet. Our client needed a technically excellent implementation team that knew how to test the code, as well as how it was delivered and deployed.

Project Engagement

Initiation

A technical architect from Marici visited the client to understand the system from both a boots-on-the-ground functional perspective and a project management perspective. We arranged a series of discussions between our technical architect and the system leads to understand the project components and how they fit together.

These were recorded and annotated for reuse as needed.

In parallel, our test organizations held a series of discussions to understand existing challenges and system quirks. Involving Test in the initial requirements gathering is key to our test-first strategy.

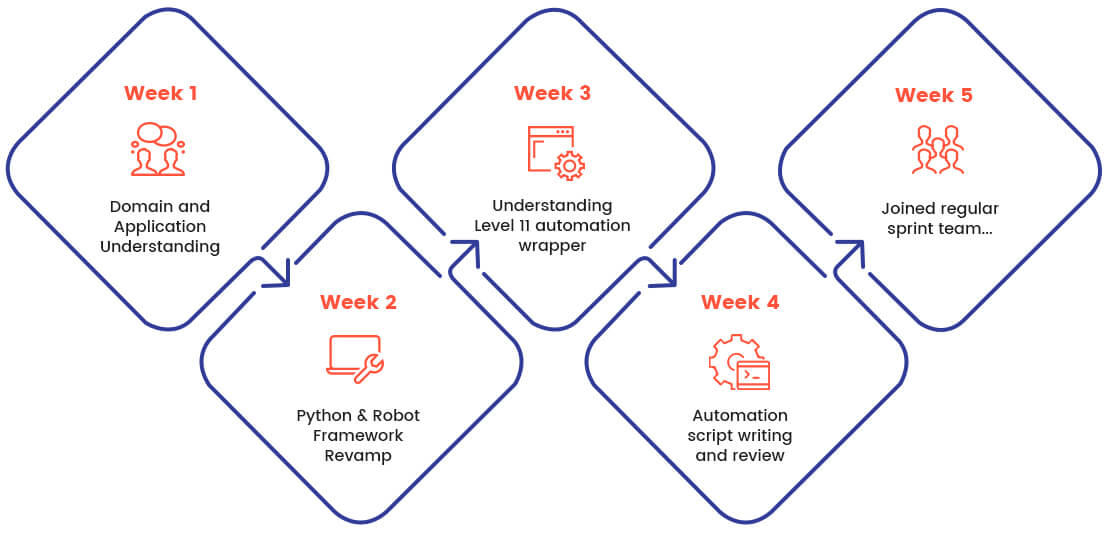

Ramp Up

By extending the team with Marici, our client expected to increase overall test coverage and surface new ideas around test strategy. Within four weeks, we collected the relevant information on the existing test infrastructure and use cases, ramped up the test team on it, and began planning a test cadence separate from the development teams' own planning.

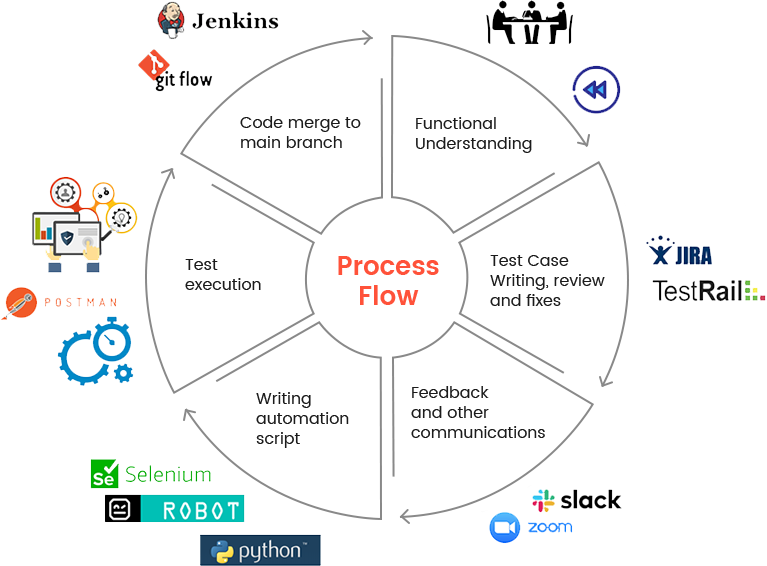

Collaboration

In a collaboration across time zones, it was very important to be deliberate about when to use synchronous communication. We started with regular sync calls and gradually shifted to relying mostly on JIRA, email, and other tools, reserving video calls for things like deep technical discussions.

Email summaries were also provided both weekly and per meeting so that everyone was in sync.

Marici Test Approach

Marici believes that the relationship between dev and test is not adversarial but co-equal: each provides a service to the other. While dev is ultimately accountable for deployment to production, test is accountable for both the technical and functional quality of the final product.

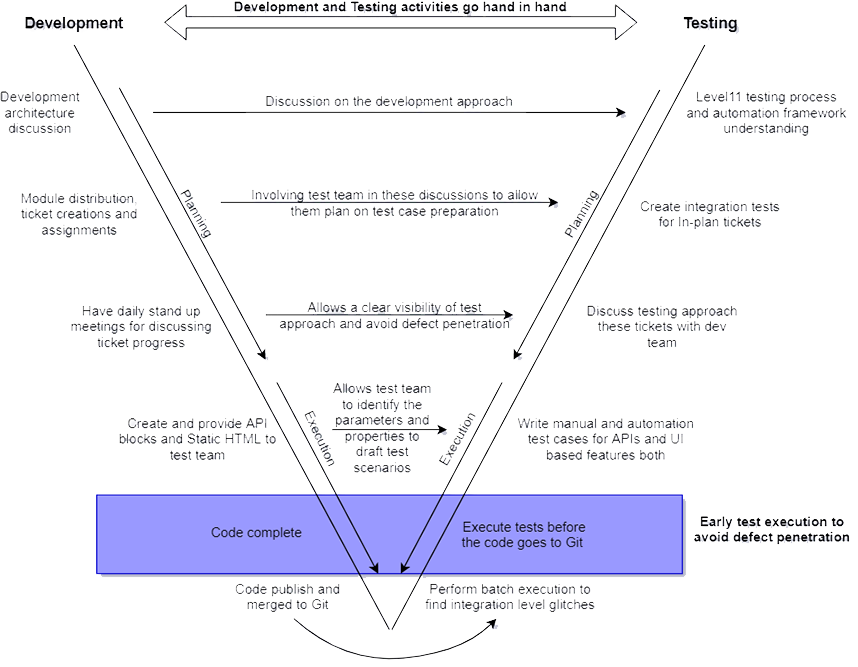

Our test-first approach is a ‘V’ Model customized for each project with two goals in mind:

- Testing should cover functional correctness, performance, resistance to failure, and technical soundness, including testability.

- The test activity should be sized, and its stringency set, in proportion to the size and mission criticality of the project in question.

While our Technical Architect worked to understand the application architecture, our Test Architect crafted a testing strategy based on the incoming information.

Several other activities also took place in parallel, as illustrated in the accompanying diagram. Every deliverable produced by one team informs the other team's deliverables, creating good symbiosis and eliminating much of the cross-talk that would typically be required.

Absorbing New Tools and Processes

Test strategies tend to be even more heterogeneous than the custom libraries typically seen in development projects. This project used a different automation framework, Robot Framework. Automation written with Robot Framework can be understood even by people who do not program, because test cases are written as keyword-driven steps in near-plain English that make it easy to see what the code does. Its syntax also supports a Behavior-Driven Development (BDD) style. Thanks to a very mature approach to the test cycle, we were able to plan knowledge transfer on Robot Framework in time to use it for test execution in the first cycle.
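To illustrate why Robot Framework tests read like plain English, here is a minimal sketch, not code from the project. Robot Framework can import a plain Python class as a test library, and each public method becomes a keyword whose name is the method name with underscores read as spaces. The class `DrillLibrary`, its keywords, and the in-memory muster-station manifest are all hypothetical stand-ins for the real system.

```python
"""Hypothetical Robot Framework keyword library for a muster-drill check.

Robot Framework can import a plain Python class as a test library; each
public method becomes a keyword (mark_guest_present -> "Mark Guest Present").
A test case using this library might read:

    *** Settings ***
    Library    DrillLibrary.py

    *** Test Cases ***
    Missing Guests Are Reported
        Start Drill    A
        Mark Guest Present    G-101
        Mark Guest Present    G-102
        Missing Guests Should Be    G-103
"""


class DrillLibrary:
    """In-memory stand-in for the real system; all names are illustrative."""

    def __init__(self):
        # Hypothetical manifest: guest IDs assigned to each muster station.
        self._manifest = {"A": {"G-101", "G-102", "G-103"}}
        self._station = None
        self._present = set()

    def start_drill(self, station):
        """Begin a drill at one station and clear previous check-ins."""
        if station not in self._manifest:
            raise AssertionError(f"Unknown muster station: {station}")
        self._station = station
        self._present = set()

    def mark_guest_present(self, guest_id):
        """Record a check-in; fail if the guest belongs elsewhere."""
        if self._station is None:
            raise AssertionError("No drill has been started")
        if guest_id not in self._manifest[self._station]:
            raise AssertionError(f"{guest_id} is not assigned to station {self._station}")
        self._present.add(guest_id)

    def missing_guests_should_be(self, *expected):
        """Fail unless exactly the expected guests have not checked in."""
        if self._station is None:
            raise AssertionError("No drill has been started")
        missing = self._manifest[self._station] - self._present
        if missing != set(expected):
            raise AssertionError(
                f"Expected missing {sorted(expected)}, got {sorted(missing)}"
            )
```

A Robot Framework keyword fails when the underlying method raises an exception, which is why the checks raise `AssertionError` rather than returning a flag. The readable test case lives in the `.robot` file, while the Python library holds the implementation details.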

Deliverables

- Functional User Interface and API test cases in TestRail

- Execution reports for manual tests in TestRail, with observations on actual outcomes

- Execution reports in TestRail for API tests performed with Postman, with observations on actual outcomes

- Execution reports for functional tests performed with Selenium and Python in combination with Robot Framework, which was wrapped in the Level 11 architecture for custom reporting

- Automation scripts checked in to the Git repository

- Daily and weekly status reports on work done, along with worklogs recording attendance

What Did We Cover?

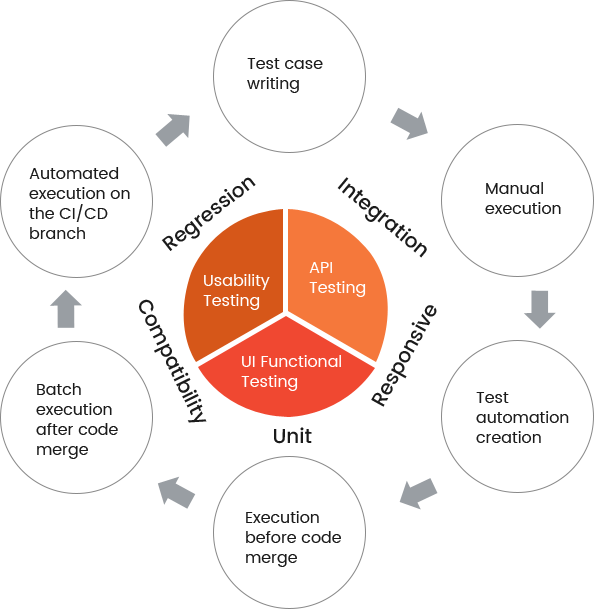

During the development cycle, we identified, wrote, executed and delivered test cases of different types and at different levels.

- Unit tests: Ideally written by developers. Here, development team members wrote them, and the automation team managed them, taking the developers' input into account. Unit test coverage and parameters were made visible to the automation team so that it could better understand the code.

- Regression tests: For each specific functionality, we maintained separate regression suites for the APIs and for the functional UI. These suites were both executed manually and automated.

- Integration tests: To make sure that all modules work and communicate correctly together, we identified and wrote integration tests, again for both manual and automated execution.

- Compatibility tests: Most applications need to be checked for consistent behavior across configurations. Accordingly, we performed cross-browser testing of the web application on different combinations of operating system and browser. This surfaced visible differences in third-party components, which was a valuable find.

- Responsive tests: When testing an application, especially on mobile devices or the web, we cannot know the screen resolution of the device in use. The application's elements should therefore respond correctly to the different resolutions of the browsers and devices in use. The most widely used resolutions should be chosen for these checks so that most of the user base is covered.
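The compatibility and responsive checks above amount to a test matrix. The following is a minimal sketch, assuming hypothetical OS, browser, and resolution lists (the project's actual matrix is not documented here), of how such a matrix can be enumerated in Python with `itertools.product` while skipping combinations that cannot occur.

```python
from itertools import product

# Hypothetical platform lists; the project's real matrix is not documented.
OPERATING_SYSTEMS = ["Windows 11", "macOS 14", "Ubuntu 22.04"]
BROWSERS = ["Chrome", "Firefox", "Edge", "Safari"]
RESOLUTIONS = [(1920, 1080), (1366, 768), (390, 844)]


def is_supported(os_name, browser):
    """Current Safari releases run only on Apple platforms, so skip it elsewhere."""
    return browser != "Safari" or os_name.startswith("macOS")


def compatibility_matrix():
    """Every supported OS + browser pair, each checked at every resolution."""
    return [
        (os_name, browser, width, height)
        for os_name, browser in product(OPERATING_SYSTEMS, BROWSERS)
        if is_supported(os_name, browser)
        for width, height in RESOLUTIONS
    ]
```

Each row could then drive one parameterized run, for example by sizing the browser window with Selenium's `set_window_size(width, height)`. Filtering unsupported pairs up front keeps the matrix from growing with combinations that no real user would have.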

Conclusion

Making sure that we helped Level 11 deliver at the speed and quality their customers expected was front and centre of our mission. Everything we did had to answer yes to the question “Does this help the mission?”

Key Takeaways

- Continuous collaboration with the development team from the very start of the project led to an early understanding of the system and minimized gaps.

- Sharing the test approach with the development team before or during development allowed developers to add proper validations to their code.

- Close internal support and proactive test involvement gave the development team the confidence to rely on the test team: before merging code into the Level 11 version, developers asked the test team to run quality checks.

- Performing quality checks, both manual and automated, on the API responses as well as on the UI gave assurance that the API and UI communicate as they should.

- The collaboration and trust established between the Marici and Level 11 teams, across both Development and Test, pushed us to perform beyond our usual best.
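The API-versus-UI check in the takeaways above can be sketched as a comparison between the API's JSON response and the text the UI displays. This is an illustrative sketch only: the field names, UI labels, and the assumption that the UI text has already been collected (for example with Selenium) are hypothetical.

```python
import json

# Hypothetical mapping from API response fields to the labels shown in the UI.
FIELD_TO_LABEL = {
    "guestCount": "Guests on board",
    "crewCount": "Crew on board",
    "status": "Drill status",
}


def api_ui_mismatches(api_body, ui_values):
    """Return (field, api_value, ui_text) for every field whose UI text differs.

    api_body: raw JSON text returned by the API.
    ui_values: label -> text read from the page (e.g. collected with Selenium).
    """
    data = json.loads(api_body)
    mismatches = []
    for field, label in FIELD_TO_LABEL.items():
        api_value = str(data.get(field))            # a missing field becomes "None"
        ui_text = ui_values.get(label, "").strip()  # ignore stray whitespace in the UI
        if api_value != ui_text:
            mismatches.append((field, api_value, ui_text))
    return mismatches
```

Returning every mismatch, rather than stopping at the first one, makes a single run report all the places where the API and UI disagree.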

Outcomes

The Marici team delivered an average of 90+ test automation scripts per SDET in just 8 months. This not only solved a key problem for the Level 11 test team but was also a great achievement for us, given that we also handled many additional tasks: manual test case writing, reviews, test executions, bug reporting for manual executions, automation script writing, code reviews, pre-build executions and, finally, analysis of batch executions.

The confidence in the technical soundness of the system allowed strategic investments in other areas, such as improved customer experience and in-cabin gaming and entertainment.